FusionInsight HD Client Installation and Usage


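FusionInsight HD provides shell scripts for each of its services. Developers and maintainers use them to log in to the corresponding service's maintenance client and carry out maintenance tasks in different scenarios.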

1. Prerequisites

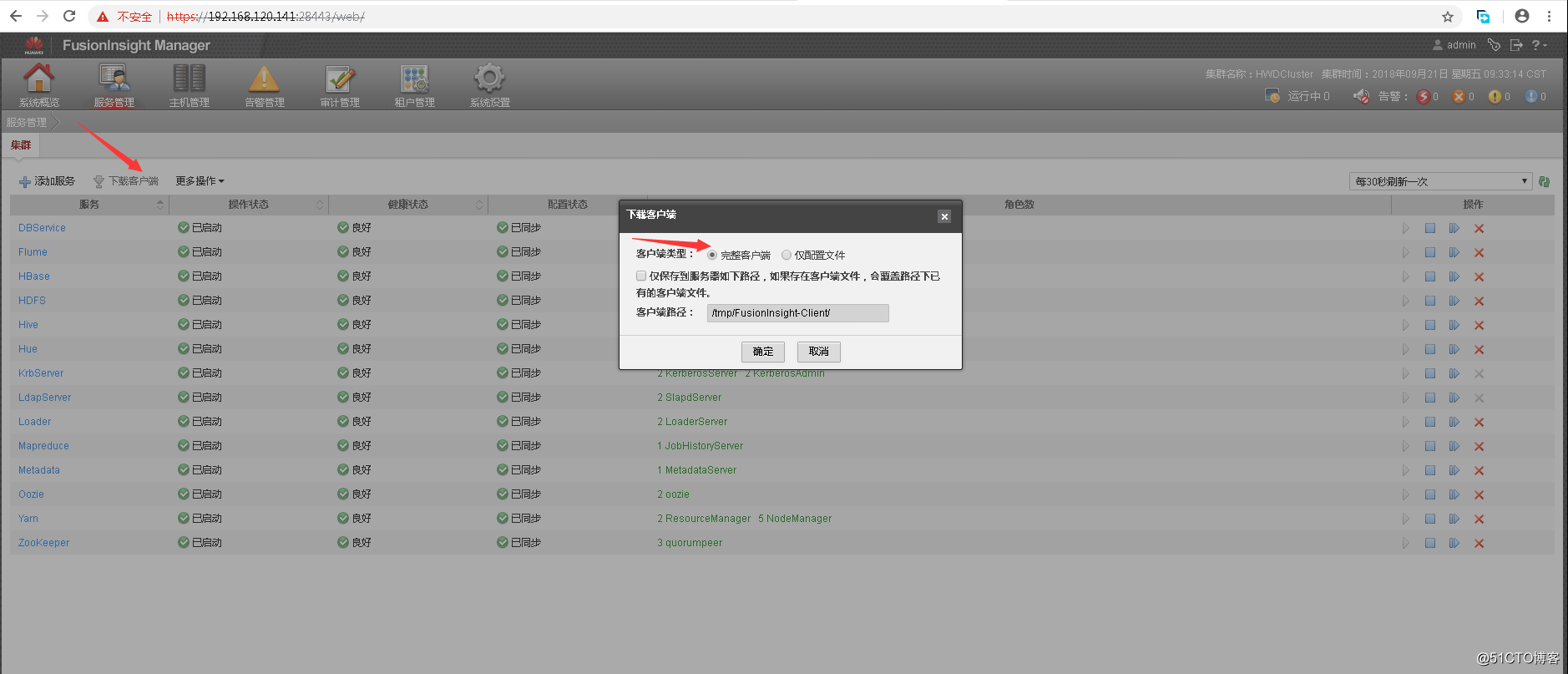

2. Obtain the client software package

Log in to FusionInsight Manager and click "Services". In the menu bar, click "Download Client". The "Client Type" dialog box appears. Under "Client Type", select "Complete Client".

3. Install the client software

Upload the client package to the client server and extract it:

[root@hdp02 ~]# mkdir /opt/client
[root@hdp02 ~]# cd /opt/client
[root@hdp02 client]# tar -xf FusionInsight_Services_Client.tar
[root@hdp02 client]# sha256sum -c FusionInsight_Services_ClientConfig.tar.sha256
FusionInsight_Services_ClientConfig.tar: OK
[root@hdp02 client]# tar -xf FusionInsight_Services_ClientConfig.tar
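The checksum check above only helps if extraction is skipped when it fails. The function below is a minimal sketch of that idea. The function name `verify_and_extract` is hypothetical and is not part of the FusionInsight client. It relies only on `sha256sum -c` and `tar`.

```shell
# Hypothetical helper (not shipped with FusionInsight): check a tarball against
# its .sha256 file and extract it only when the checksum matches.
# Usage: verify_and_extract <directory> <tarball-name>
verify_and_extract() {
  local dir=$1 tarball=$2
  (
    cd "$dir" || exit 1
    # sha256sum -c reads "<hash>  <file>" lines and exits non-zero on mismatch
    sha256sum -c "${tarball}.sha256" || exit 1
    tar -xf "$tarball"
  )
}

# Example matching the steps above:
#   verify_and_extract /opt/client FusionInsight_Services_ClientConfig.tar
```

The subshell keeps the `cd` from changing the caller's working directory.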

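Change to the client configuration directory and run the installer. Here the client is installed to /opt/huawei/client. The pre-install check requires the NTP service to be running and the target directory to be empty. The first two attempts in the log below fail on exactly these two checks: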

[root@hdp02 client]# cd FusionInsight_Services_ClientConfig
[root@hdp02 client]# mkdir -p /opt/huawei/client
[root@hdp02 FusionInsight_Services_ClientConfig]# ./install.sh /opt/huawei/client/
[18-09-20 10:20:58]: Pre-install check begin...
[18-09-20 10:20:58]: Checking necessary files and directory.
[18-09-20 10:20:58]: Checking NTP service status.
[18-09-20 10:20:58]: Error: Network time protocol(NTP) not running. Please start NTP first.
[root@hdp02 FusionInsight_Services_ClientConfig]# ./install.sh /opt/huawei/client/
[18-09-20 10:31:50]: Pre-install check begin...
[18-09-20 10:31:50]: Error: "/opt/huawei/client/" is not empty.
[root@hdp02 FusionInsight_Services_ClientConfig]# ./install.sh /opt/huawei/client/
[18-09-20 10:32:11]: Pre-install check begin...
[18-09-20 10:32:11]: Checking necessary files and directory.
[18-09-20 10:32:11]: Checking NTP service status.
[18-09-20 10:32:11]: Checking "/etc/hosts" config.
[18-09-20 10:32:11]: Pre-install check is complete.
[18-09-20 10:32:11]: Precheck on components begin...
[18-09-20 10:32:11]: Precheck Loader begin ...
[18-09-20 10:32:11]: Checking java environment.
[18-09-20 10:32:11]: Precheck on components is complete.
[18-09-20 10:32:11]: Deploy "dest_hosts" begin ...
[18-09-20 10:32:11]: Warning: "hwd02" already exists in "/etc/hosts", it will be overwritten.
[18-09-20 10:32:11]: Warning: "hwd04" already exists in "/etc/hosts", it will be overwritten.
[18-09-20 10:32:11]: Warning: "hwd05" already exists in "/etc/hosts", it will be overwritten.
[18-09-20 10:32:11]: Warning: "hwd01" already exists in "/etc/hosts", it will be overwritten.
[18-09-20 10:32:11]: Warning: "hwd03" already exists in "/etc/hosts", it will be overwritten.
[18-09-20 10:32:11]: Deploy "dest_hosts" is complete.
[18-09-20 10:32:11]: Install public library begin ...
[18-09-20 10:32:11]: Install Fiber begin ...
[18-09-20 10:32:11]: Deploy Fiber client to /opt/huawei/client//Fiber
[18-09-20 10:32:11]: Create Fiber env file "/opt/huawei/client//Fiber/component_env".
[18-09-20 10:32:11]: Create Fiber conf file "/opt/huawei/client//Fiber/conf/fiber.xml"
[18-09-20 10:32:11]: Fiber installation is complete.
[18-09-20 10:32:11]: Public library installation is complete.
[18-09-20 10:32:11]: Install components client begin ...
[18-09-20 10:32:11]: Install HBase begin ...
[18-09-20 10:32:11]: Copy /opt/client/FusionInsight_Services_ClientConfig/HBase/FusionInsight-HBase-1.3.1.tar.gz to /opt/huawei/client//HBase.
/opt/client/FusionInsight_Services_ClientConfig/HBase
[18-09-20 10:32:11]: Copy HBase config files to "/opt/huawei/client//HBase/hbase/conf"
[18-09-20 10:32:11]: Create HBase env file "/opt/huawei/client//HBase/component_env".
[18-09-20 10:32:11]: Setup fiber conf started.
[18-09-20 10:32:12]: HBase installation is complete.
[18-09-20 10:32:12]: Install HDFS begin ...
[18-09-20 10:32:12]: Copy /opt/client/FusionInsight_Services_ClientConfig/HDFS/FusionInsight-Hadoop-2.7.2.tar.gz to /opt/huawei/client/HDFS.
/opt/client/FusionInsight_Services_ClientConfig/HDFS
[18-09-20 10:32:12]: Copy Hadoop config files to "/opt/huawei/client/HDFS/hadoop/etc/hadoop"
[18-09-20 10:32:12]: Create Hadoop env file "/opt/huawei/client/HDFS/component_env".
[18-09-20 10:32:12]: HDFS installation is complete.
[18-09-20 10:32:12]: Install Hive begin ...
[18-09-20 10:32:12]: Deploy Hive client directory to /opt/huawei/client//Hive.
/opt/client/FusionInsight_Services_ClientConfig/Hive
[18-09-20 10:32:12]: start to generate /opt/huawei/client//Hive/Beeline/conf/beeline_hive_jaas.conf for beeline...
[18-09-20 10:32:12]: generated /opt/huawei/client//Hive/Beeline/conf/beeline_hive_jaas.conf
[18-09-20 10:32:12]: Deploy HCatalog client to /opt/huawei/client//Hive
[18-09-20 10:32:13]: URI is 'jdbc:hive2://192.168.110.157:24002,192.168.110.158:24002,192.168.110.159:24002/\;serviceDiscoveryMode=zooKeeper\;zooKeeperNamespace=hiveserver2\;sasl.qop=auth-conf\;auth=KERBEROS\;principal=hive/hadoop.hadoop.com@HADOOP.COM'.
[18-09-20 10:32:13]: Create Hive env file "/opt/huawei/client//Hive/component_env".
/opt/huawei/client/Hive/Beeline
[18-09-20 10:32:13]: Setup fiber conf started.
[18-09-20 10:32:13]: Hive installation is complete.
[18-09-20 10:32:13]: Install JDK begin ...
[18-09-20 10:32:13]: Decompress jdk.tar.gz to /opt/huawei/client//JDK.
/opt/client/FusionInsight_Services_ClientConfig/JDK
[18-09-20 10:32:19]: Create JRE env file "/opt/huawei/client//JDK/component_env".
[18-09-20 10:32:19]: JDK installation is complete.
[18-09-20 10:32:19]: Warning: /opt/client/FusionInsight_Services_ClientConfig/JDK/VERSION not exist.
[18-09-20 10:32:19]: Install KrbClient begin ...
[18-09-20 10:32:19]: Copy /opt/client/FusionInsight_Services_ClientConfig/KrbClient/FusionInsight-kerberos-1.15.2.tar.gz to /opt/huawei/client//KrbClient.
/opt/client/FusionInsight_Services_ClientConfig/KrbClient
[18-09-20 10:32:19]: Copy KRB config files to "/opt/huawei/client//KrbClient/kerberos/conf"
[18-09-20 10:32:19]: Copy security script files to "/opt/huawei/client//KrbClient/kerberos/bin"
[18-09-20 10:32:19]: Create KRB env file "/opt/huawei/client//KrbClient/component_env".
[18-09-20 10:32:19]: KrbClient installation is complete.
[18-09-20 10:32:19]: Install Loader begin ...
Install loader client successfully.
[18-09-20 10:32:19]: Loader installation is complete.
[18-09-20 10:32:19]: Install Oozie begin ...
/opt/client/FusionInsight_Services_ClientConfig/Oozie/install.sh: line 48: local: can only be used in a function
[18-09-20 10:32:19]: Copy /opt/client/FusionInsight_Services_ClientConfig/Oozie/install_files to /opt/huawei/client//Oozie.
/opt/client/FusionInsight_Services_ClientConfig/Oozie/install.sh: line 69: [: -eq: unary operator expected
[18-09-20 10:32:20]: Copy Oozie config files to "/opt/huawei/client//Oozie/oozie-client-4.2.0/conf"
[18-09-20 10:32:20]: Create Oozie env file "/opt/huawei/client//Oozie/component_env".
/opt/client/FusionInsight_Services_ClientConfig/Oozie/install.sh: line 109: [: -eq: unary operator expected
[18-09-20 10:32:20]: Oozie installation is complete.
[18-09-20 10:32:20]: Install Yarn begin ...
[18-09-20 10:32:20]: Copy Yarn config files to "/opt/huawei/client/Yarn/config"
[18-09-20 10:32:20]: Yarn installation is complete.
[18-09-20 10:32:20]: Install ZooKeeper begin ...
[18-09-20 10:32:20]: Copy /opt/client/FusionInsight_Services_ClientConfig/ZooKeeper/FusionInsight-Zookeeper-3.5.1.tar.gz to /opt/huawei/client//ZooKeeper.
/opt/client/FusionInsight_Services_ClientConfig/ZooKeeper
[18-09-20 10:32:20]: Create Zookeeper env file "/opt/huawei/client//ZooKeeper/component_env".
[18-09-20 10:32:20]: Copy zookeeper config files to /opt/huawei/client//ZooKeeper/zookeeper/conf
[18-09-20 10:32:20]: ZooKeeper installation is complete.
[18-09-20 10:32:20]: Components client installation is complete.
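The first two runs of install.sh stopped at the pre-install check. One run failed because NTP was not running, and the other because the target directory was not empty. The following is a hedged sketch of a pre-flight check you could run before calling the installer. It assumes the NTP daemon is the systemd unit `ntpd`, as in section 5. It also assumes the target directory has already been created, as in the `mkdir -p` step above. All function names are hypothetical.

```shell
# Hypothetical pre-flight check mirroring the two installer errors above.
# Assumption: NTP runs as the systemd unit "ntpd".
ntp_is_running() {
  systemctl is-active --quiet ntpd   # exit status 0 only if the unit is active
}

# True if <dir> exists and contains no entries (hidden files included).
target_dir_is_empty() {
  local dir=$1
  [ -d "$dir" ] && [ -z "$(ls -A "$dir")" ]
}

precheck_client_install() {
  local dir=$1
  ntp_is_running || { echo "ntpd is not running; start NTP first" >&2; return 1; }
  target_dir_is_empty "$dir" || { echo "\"$dir\" is missing or not empty" >&2; return 1; }
  echo "precheck passed for $dir"
}

# Usage:
#   precheck_client_install /opt/huawei/client && ./install.sh /opt/huawei/client/
```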

4. Verify the installation

Load the client environment variables:

[root@hdp02 ~]# source /opt/huawei/client/bigdata_env
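After `bigdata_env` is sourced, the commands used in the rest of this article (`kinit`, `klist`, `hdfs`, `beeline`) should resolve on `PATH`. Below is a small hypothetical check for that, built only on the shell builtin `command -v`.

```shell
# Hypothetical check: report every named command that is not on PATH.
# Returns non-zero if at least one is missing.
require_commands() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   source /opt/huawei/client/bigdata_env
#   require_commands kinit klist hdfs beeline && command -v hdfs
```

The final `command -v hdfs` prints the resolved path. That path is expected, though not confirmed by the article, to lie under the client installation directory /opt/huawei/client.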

Obtain a Kerberos ticket with kinit:

[root@hdp02 client]# kinit admin
Password for admin@HADOOP.COM:    # enter the admin user's password (the same password used to log in to the cluster)
[root@hdp02 client]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: admin@HADOOP.COM

Valid starting       Expires              Service principal
09/21/2018 09:43:33  09/22/2018 09:43:31  krbtgt/HADOOP.COM@HADOOP.COM
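In scripts, you can skip the interactive `kinit` when a valid ticket is already cached, and you can confirm which principal is in use. This sketch uses the MIT Kerberos option `klist -s`, which prints nothing and only sets the exit status. It also assumes a hypothetical parser, `default_principal`, that reads the "Default principal" line from `klist` output.

```shell
# Hypothetical helper: print the "Default principal" from klist output on stdin.
default_principal() {
  sed -n 's/^Default principal: //p'
}

# Scripted use (klist -s exits 0 when a readable, unexpired cache exists):
#   klist -s || kinit admin
#   [ "$(klist | default_principal)" = "admin@HADOOP.COM" ] || echo "unexpected principal" >&2
```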

Verify HDFS:

[root@hdp02 client]# hdfs dfs -ls /
Found 12 items
drwxrwxrwx   - hdfs    hadoop     0 2018-09-19 13:36 /app-logs
drwxrwx---   - hive    hive       0 2018-09-19 13:40 /apps
drwxr-xr-x   - hdfs    hadoop     0 2018-09-19 13:36 /datasets
drwxr-xr-x   - hdfs    hadoop     0 2018-09-19 13:36 /datastore
drwxr-x---   - flume   hadoop     0 2018-09-19 13:36 /flume
drwx------   - hbase   hadoop     0 2018-09-21 10:01 /hbase
drwxrwxrwx   - mapred  hadoop     0 2018-09-19 13:36 /mr-history
drwxrwxrwt   - spark2x hadoop     0 2018-09-19 13:36 /spark2xJobHistory2x
drwxrwxrwt   - spark   hadoop     0 2018-09-19 13:36 /sparkJobHistory
drwx--x--x   - admin   supergroup 0 2018-09-19 13:36 /tenant
drwxrwxrwx   - hdfs    hadoop     0 2018-09-19 13:36 /tmp
drwxrwxrwx   - hdfs    hadoop     0 2018-09-19 13:39 /user
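Listing `/` only proves that the client can read metadata. A slightly stronger smoke test writes a small file, reads it back, and deletes it. The sketch below uses the FileSystem shell's `-put -` (read from stdin), `-cat`, and `-rm -skipTrash`. By default it writes under `/tmp`, which is world-writable (`drwxrwxrwx`) in the listing above. The function name and the `HDFS_BIN` override variable are hypothetical. The override exists only so the logic can be exercised without a cluster.

```shell
# Hypothetical HDFS write/read/delete smoke test.
# Assumes bigdata_env is sourced and a valid Kerberos ticket exists.
# HDFS_BIN may point at another command; it defaults to "hdfs".
hdfs_roundtrip() {
  local hdfs=${HDFS_BIN:-hdfs}
  local remote=${1:-/tmp/client_smoke_$$.txt}
  local payload="fusioninsight client ok" got
  # "-put -" takes the file contents from stdin
  printf '%s\n' "$payload" | "$hdfs" dfs -put - "$remote" || return 1
  got=$("$hdfs" dfs -cat "$remote") || return 1
  "$hdfs" dfs -rm -skipTrash "$remote" >/dev/null || return 1
  [ "$got" = "$payload" ]
}

# Usage: hdfs_roundtrip && echo "HDFS read/write OK"
```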

5. Batch client configuration

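Batch configuration here means installing the client software once on an NFS server. The other client hosts mount the NFS share at the specified location and then only need their NTP service and hosts file configured.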

5.1 Install the client on the NFS server

- NTP configuration

[root@onas FusionInsight_Services_ClientConfig]# vi /etc/ntp.conf
server 192.168.120.140 key 1 prefer burst iburst minpoll 4 maxpoll 4
restrict -4 default kod notrap nomodify nopeer noquery
restrict -6 default kod notrap nomodify nopeer noquery
restrict 127.0.0.1
restrict ::1
tinker step 0
tinker panic 0
disable monitor
disable kernel
enable auth
keys /etc/ntp/ntpkeys
trustedkey 1
requestkey 1
controlkey 1
-- start the NTP service
[root@onas FusionInsight_Services_ClientConfig]# systemctl start ntpd
[root@onas FusionInsight_Services_ClientConfig]# systemctl enable ntpd
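The NFS server and every NFS client in 5.2 use the same ntp.conf, differing only in the server address and key ID. Generating the file from one function helps keep the copies from drifting apart. This is a hypothetical generator that reproduces the configuration above. It assumes that /etc/ntp/ntpkeys already contains the referenced key; the article does not say how that key file is obtained.

```shell
# Hypothetical generator for the ntp.conf shown above.
# $1 = NTP server address, $2 = key id (default 1; must exist in /etc/ntp/ntpkeys)
render_ntp_conf() {
  local server=$1 key=${2:-1}
  cat <<EOF
server ${server} key ${key} prefer burst iburst minpoll 4 maxpoll 4
restrict -4 default kod notrap nomodify nopeer noquery
restrict -6 default kod notrap nomodify nopeer noquery
restrict 127.0.0.1
restrict ::1
tinker step 0
tinker panic 0
disable monitor
disable kernel
enable auth
keys /etc/ntp/ntpkeys
trustedkey ${key}
requestkey ${key}
controlkey ${key}
EOF
}

# Usage (as root; back up the existing file first):
#   cp /etc/ntp.conf /etc/ntp.conf.bak
#   render_ntp_conf 192.168.120.140 1 > /etc/ntp.conf && systemctl restart ntpd
```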

- hosts configuration

[root@onas ~]# vi /etc/hosts
192.168.110.158 hwd02
192.168.110.160 hwd04
192.168.110.161 hwd05
192.168.110.157 hwd01
192.168.110.159 hwd03
192.168.120.140 casserver
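The same six host mappings are needed on the NFS server and on every client. Editing by hand with `vi` is easy to get wrong across many hosts. The function below is a hypothetical, idempotent alternative: running it twice does not duplicate lines. It removes any non-comment line that lists the given hostname and then appends the new mapping. Note that any other aliases on a removed line are dropped along with it.

```shell
# Hypothetical helper: make <file> map <name> to exactly one address.
# Non-comment lines listing <name> in a hostname field are removed, then
# "<ip> <name>" is appended.
set_host_entry() {
  local file=$1 ip=$2 name=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v n="$name" '
    /^[ \t]*#/ { print; next }
    { keep = 1
      for (i = 2; i <= NF; i++) if ($i == n) keep = 0
      if (keep) print }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s %s\n' "$ip" "$name" >> "$tmp"
  cat "$tmp" > "$file"   # rewrite in place so the file keeps its owner and mode
  rm -f "$tmp"
}

# Apply the mappings used in this article (as root):
#   while read -r ip name; do set_host_entry /etc/hosts "$ip" "$name"; done <<'EOF'
#   192.168.110.158 hwd02
#   192.168.110.160 hwd04
#   192.168.110.161 hwd05
#   192.168.110.157 hwd01
#   192.168.110.159 hwd03
#   192.168.120.140 casserver
#   EOF
```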

- Install the client

[root@onas FusionInsight_Services_ClientConfig]# ./install.sh /u02/huawei/client
[18-09-21 11:41:48]: Pre-install check begin...
[18-09-21 11:41:48]: Checking necessary files and directory.
[18-09-21 11:41:48]: Checking NTP service status.
[18-09-21 11:41:48]: Checking "/etc/hosts" config.
<.......>
[18-09-21 11:41:56]: Components client installation is complete.
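For the clients in 5.2 to mount `onas:/u02`, the NFS server must export /u02. The article does not show this step. The following sketch adds an entry to the exports file only if /u02 is not already exported, and then reloads it with `exportfs -ra`. The client network and the export options are assumptions; adjust them to your environment. For example, `no_root_squash` is assumed here so that root on the clients can read root-owned client files.

```shell
# Hypothetical helper: append "<dir> <clients>" to an exports file unless a
# non-comment line already exports <dir> (field 1 of an exports line is the path).
ensure_export() {
  local exports=$1 dir=$2 clients=$3
  if awk -v d="$dir" '!/^[ \t]*#/ && $1 == d { found = 1 } END { exit !found }' "$exports"; then
    return 0
  fi
  printf '%s %s\n' "$dir" "$clients" >> "$exports"
}

# On onas, as root (network range and options are assumptions):
#   ensure_export /etc/exports /u02 '192.168.0.0/16(ro,sync,no_root_squash)'
#   exportfs -ra
```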

- Verification

[root@onas ~]# source /u02/huawei/client/bigdata_env
[root@onas ~]# kinit candon
Password for candon@HADOOP.COM:
[root@onas ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: candon@HADOOP.COM

Valid starting       Expires              Service principal
09/21/2018 11:44:05  09/22/2018 11:44:01  krbtgt/HADOOP.COM@HADOOP.COM
[root@onas ~]# hdfs dfsadmin -report
Configured Capacity: 717607747591 (668.32 GB)
Present Capacity: 715191830197 (666.07 GB)
DFS Remaining: 713422566437 (664.43 GB)
DFS Used: 1769263760 (1.65 GB)
DFS Used%: 0.25%
Under replicated blocks: 0
Blocks with corrupt replicas: 0
Missing blocks: 0
Missing blocks (with replication factor 1): 0
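The report above shows zero missing and zero corrupt blocks. To turn that visual check into a pass/fail test, you can scan the report's summary lines. The filter below is a hypothetical example that fails if any "Missing blocks" or "Blocks with corrupt replicas" counter is non-zero.

```shell
# Hypothetical health gate over `hdfs dfsadmin -report` output read on stdin.
report_is_healthy() {
  awk -F': ' '
    /^Missing blocks/ || /^Blocks with corrupt replicas/ {
      if ($2 + 0 != 0) bad = 1
    }
    END { exit (bad ? 1 : 0) }'
}

# Usage: hdfs dfsadmin -report | report_is_healthy && echo "no missing or corrupt blocks"
```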

5.2 Install and configure the NFS clients

- NTP service configuration

[root@hdp03 ~]# vi /etc/ntp.conf
server 192.168.120.140 key 1 prefer burst iburst minpoll 4 maxpoll 4
restrict -4 default kod notrap nomodify nopeer noquery
restrict -6 default kod notrap nomodify nopeer noquery
restrict 127.0.0.1
restrict ::1
tinker step 0
tinker panic 0
disable monitor
disable kernel
enable auth
keys /etc/ntp/ntpkeys
trustedkey 1
requestkey 1
controlkey 1
-- start the NTP service
[root@hdp03 ~]# systemctl start ntpd
[root@hdp03 ~]# systemctl enable ntpd

- hosts configuration

[root@hdp03 ~]# vi /etc/hosts
192.168.110.158 hwd02
192.168.110.160 hwd04
192.168.110.161 hwd05
192.168.110.157 hwd01
192.168.110.159 hwd03
192.168.120.140 casserver

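- Mount the NFS share (the mount point /u02 must exist on the client, and the NFS server name onas must resolve, for example through DNS or an /etc/hosts entry)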

[root@hdp03 ~]# mount onas:/u02 /u02
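A plain `mount` does not survive a reboot. This is a hedged sketch for making the share persistent: it adds an /etc/fstab entry only if /u02 is not already listed. The `nfs` type and the `defaults,_netdev` options are assumptions, where `_netdev` delays the mount until the network is up. The helper name is hypothetical.

```shell
# Hypothetical helper: append an NFS entry to an fstab file unless the mount
# point is already listed (field 2 of an fstab line is the mount point).
ensure_fstab_entry() {
  local fstab=$1 src=$2 mnt=$3 opts=${4:-defaults,_netdev}
  if awk -v m="$mnt" '!/^[ \t]*#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    return 0
  fi
  printf '%s\t%s\tnfs\t%s\t0 0\n' "$src" "$mnt" "$opts" >> "$fstab"
}

# On the client host, as root:
#   mkdir -p /u02
#   ensure_fstab_entry /etc/fstab onas:/u02 /u02
#   mountpoint -q /u02 || mount /u02
```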

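- Verification (load the client environment from the share first with source /u02/huawei/client/bigdata_env, as on the NFS server)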

[root@hdp03 ~]# kinit candon
Password for candon@HADOOP.COM:
[root@hdp03 ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: candon@HADOOP.COM

Valid starting       Expires              Service principal
09/21/2018 12:38:02  09/22/2018 12:37:59  krbtgt/HADOOP.COM@HADOOP.COM
[root@hdp03 ~]# beeline
Connecting to jdbc:hive2://192.168.110.157:24002,192.168.110.158:24002,192.168.110.159:24002/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2;sasl.qop=auth-conf;auth=KERBEROS;principal=hive/hadoop.hadoop.com@HADOOP.COM
Debug is true storeKey false useTicketCache true useKeyTab false doNotPrompt false ticketCache is null isInitiator true KeyTab is null refreshKrb5Config is false principal is null tryFirstPass is false useFirstPass is false storePass is false clearPass is false
Acquire TGT from Cache
Principal is candon@HADOOP.COM
Commit Succeeded

Connected to: Apache Hive (version 1.2.1)
Driver: Hive JDBC (version 1.2.1)
Transaction isolation: TRANSACTION_REPEATABLE_READ
Beeline version 1.2.1 by Apache Hive
0: jdbc:hive2://192.168.110.157:21066/> show databases;
+----------------+--+
| database_name  |
+----------------+--+
| default        |
| hivedb         |
+----------------+--+
2 rows selected (0.74 seconds)
0: jdbc:hive2://192.168.110.157:21066/>
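The Beeline session above is interactive. For a scripted check on each NFS client, you can run the query non-interactively and parse the table output. The parser below is hypothetical and handles the default table format shown above. It skips the `+---+` borders and the header row, and prints only the first column. Lines such as the Kerberos debug output do not start with `|`, so they are ignored. The usage line assumes Apache Beeline's `-e` option and assumes that FusionInsight's `beeline` wrapper passes it through. The article does not confirm this.

```shell
# Hypothetical parser for Beeline's default table output on stdin.
beeline_table_values() {
  awk -F'|' '/^[|]/ {
    v = $2
    gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the padding inside the cell
    if (rows++ > 0) print v           # row 0 is the column header
  }'
}

# Usage (assumes -e is passed through to Apache Beeline):
#   beeline -e 'show databases;' | beeline_table_values | grep -qx hivedb && echo "hivedb visible"
```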

Reposted from: http://oraum.baihongyu.com/
